However, the confidence interval of this estimate is rather tedious to obtain, as it requires the computation of two further statistics, commonly known as D and H. The first of these is

$$D = b^2 - t^2 SE_b^2$$

where $b$ is the slope of the regression of Y on X, $t$ is a quantile from the t-distribution for the chosen type I error rate (α) and n − 2 degrees of freedom, and $SE_b$ is the standard error of the slope.

A more controversial topic, which has generated considerable debate in the journals, arises when the dependent and independent variables, Y and X, are not independent. Regression or correlation analysis may then indicate that they are correlated when in fact the relationship derives solely from the presence of a shared variable. This has come to be known as the 'spurious correlation' issue. It has been suggested that erroneous conclusions derived from spurious correlations may be more widespread and persistent in the literature than pseudo-replication ever was. Prairie & Bird (1989), on the other hand, argue that it is not the correlations that are spurious, but the inferences drawn from them. There is, however, general agreement that the usual parametric tests of significance cannot be used in this situation.

One common reason for two variables not being independent is that they share a common term. For example, two variables (X and Y) may be standardized by dividing each by a third variable (Z), and Y/Z is then regressed on X/Z. Pearson (1897) was the first to note that two variables with no correlation between them become correlated when each is divided by a third, uncorrelated variable; in this case the spurious correlation tends to be positive. Another example is plotting Y/X against X, for instance some weight-specific function against an organism's body weight.
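Pearson's observation about ratios with a common divisor is easy to reproduce by simulation. The sketch below is illustrative only: it assumes three mutually independent variables drawn uniformly from 5 to 15 (hypothetical data, chosen so that the divisor Z never approaches zero), and `pearson_r` and `ratio_correlation_demo` are hypothetical helper names written for this example, not part of any library.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def ratio_correlation_demo(n=5000, seed=1):
    """Return r(X, Y) and r(X/Z, Y/Z) for mutually independent X, Y and Z."""
    rng = random.Random(seed)
    # Hypothetical data: uniform on [5, 15], so the divisor Z never nears zero.
    x = [rng.uniform(5, 15) for _ in range(n)]
    y = [rng.uniform(5, 15) for _ in range(n)]
    z = [rng.uniform(5, 15) for _ in range(n)]
    raw = pearson_r(x, y)
    ratio = pearson_r([xi / zi for xi, zi in zip(x, z)],
                      [yi / zi for yi, zi in zip(y, z)])
    return raw, ratio


raw_r, ratio_r = ratio_correlation_demo()
print(f"r(X, Y)     = {raw_r:.3f}")   # near zero: X and Y are independent
print(f"r(X/Z, Y/Z) = {ratio_r:.3f}")  # clearly positive, roughly 0.5
```

Under these assumptions r(X, Y) stays close to zero, while r(X/Z, Y/Z) comes out clearly positive even though no variable is related to any other. The positive correlation is induced entirely by the shared divisor.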

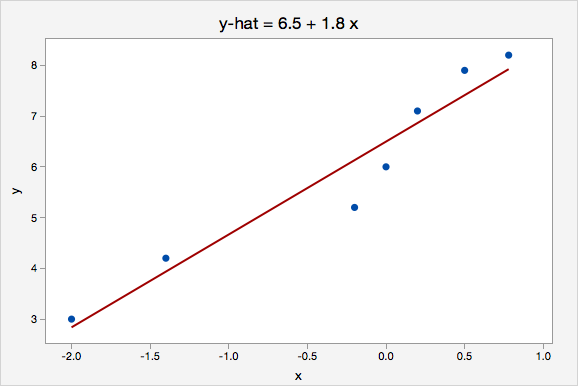

Simple linear regression provides a means to model a straight-line relationship between two variables. In classical (or asymmetric) regression, one variable (Y) is called the response or dependent variable, and the other (X) is called the explanatory or independent variable. This is in contrast to correlation, where there is no distinction between Y and X in terms of which is an explanatory variable and which a response variable. The model is

$$Y = \alpha + \beta X + \varepsilon$$

where α is the Y intercept (the value of Y where X = 0), β is the slope of the line, and ε is a random error term. The same model is often written as

$$Y = \beta_0 + \beta_1 X + \varepsilon$$

where $\beta_0$ is the Y intercept, $\beta_1$ is the slope of the line, and ε is a random error term.

In the traditional regression model, values of the X variable are assumed to be fixed by the experimenter. The model is still valid if X is random (as is more commonly the case), but only if X is measured without error. If there is substantial measurement error on X, and the values of the estimated parameters are of interest, then errors-in-variables regression should be used. Errors on the response variable are assumed to be independent, identically and normally distributed.

The parameters of the regression model are estimated from the data using ordinary least squares:

$$b = \frac{\sum XY - \frac{\sum X \sum Y}{n}}{\sum X^2 - \frac{(\sum X)^2}{n}} \qquad a = \frac{\sum Y - b\sum X}{n}$$

where b is the estimate of the slope coefficient (β), a is the estimate of the Y intercept (α) (the value of Y where X = 0), X and Y are the individual observations, and n is the number of bivariate observations.

There are several ways the significance of a regression can be tested.
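The ordinary least squares estimates can be computed directly from simple sums of the observations. The following is a minimal sketch in plain Python, assuming made-up example data; `ols_fit` and `slope_standard_error` are hypothetical helper names. It also computes the standard error of the slope from the residual mean square on n − 2 degrees of freedom, so that b/SE_b gives the t statistic for testing whether the slope differs from zero, which is one common way of testing the significance of a regression.

```python
import math


def ols_fit(xs, ys):
    """Return the OLS estimates (a, b) for Y = a + bX, computed from raw sums."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least three paired observations")
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    sxx = sum_x2 - sum_x ** 2 / n       # corrected sum of squares of X
    sxy = sum_xy - sum_x * sum_y / n    # corrected sum of cross-products
    b = sxy / sxx                       # slope estimate (beta)
    a = (sum_y - b * sum_x) / n         # intercept estimate (alpha)
    return a, b


def slope_standard_error(xs, ys, a, b):
    """SE of the slope: sqrt(residual mean square / Sxx), residual MS on n - 2 df."""
    n = len(xs)
    mean_x = sum(xs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    rss = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return math.sqrt(rss / (n - 2) / sxx)


# Hypothetical example data
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
a, b = ols_fit(xs, ys)
se_b = slope_standard_error(xs, ys, a, b)
t_stat = b / se_b  # compare with a t quantile on n - 2 degrees of freedom
print(f"a = {a:.3f}, b = {b:.3f}")  # prints a = 0.050, b = 1.990
print(f"SE_b = {se_b:.4f}, t = {t_stat:.1f}")
```

The sums-based form mirrors the hand-calculation formulas; for large values of X it can lose precision, and centring X on its mean before summing is the numerically safer choice.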
